New Scientist (11 November 2017) reports on a problem with AI object recognition:

> WHEN is a turtle not a turtle? This is a conundrum for an AI trained to identify objects. By subtly tweaking the pattern on the shell of a model turtle, Andrew Ilyas at the Massachusetts Institute of Technology and his colleagues tricked a neural network into misidentifying it as a rifle. The results raise concerns about the accuracy of face-recognition tech and the safety of driverless cars.
>
> Previous studies have shown that changing just a few pixels in an image – alterations that are imperceptible to a human – can throw an AI off its game, making it identify a picture of a horse as a car, or a plane as a dog. The model turtle now shows that an AI can be made to misidentify an object even from multiple angles.

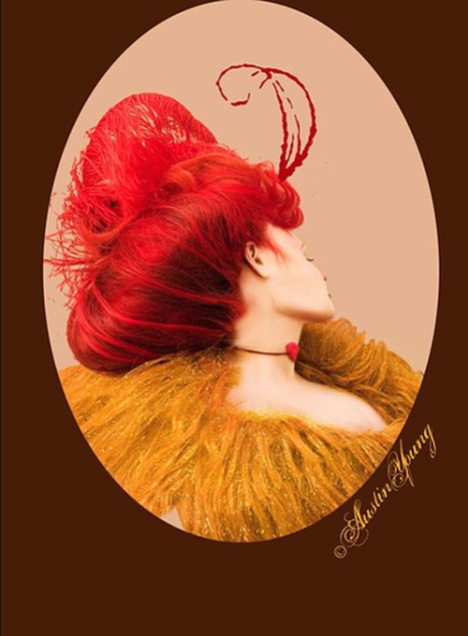

Source: Optical Illusion

The fact that the AI can be misled by such a simple approach shows there’s a problem.

But what’s the source of the problem? Neural networks are notorious for obscuring how they actually arrive at any particular conclusion. My suspicion is that the network may be analyzing every pixel of the picture individually, rather than aggregating them and matching patterns.
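
To make that suspicion concrete, here is a minimal sketch of one commonly cited explanation, under loudly stated assumptions: the "classifier" is a hypothetical toy linear model with random weights, not a real neural network, and the "turtle"/"rifle" labels are purely illustrative. The attack is the fast gradient sign method, which nudges *every* pixel by a tiny amount (rather than changing only a few pixels). It is not the technique used on the 3D turtle, which had to keep fooling the network across many viewing angles. The point it illustrates is that when a decision sums contributions from every pixel, imperceptible per-pixel changes can add up to a large shift in the score.

```python
import random


def score(weights, pixels, bias=0.0):
    """Linear score of a toy classifier: > 0 means "turtle", otherwise "rifle"."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def label(weights, pixels, bias=0.0):
    return "turtle" if score(weights, pixels, bias) > 0 else "rifle"


def sign(x):
    return (x > 0) - (x < 0)


def fgsm_linear(weights, pixels, epsilon, bias=0.0):
    """Fast gradient sign method against a linear model.

    The gradient of a linear score with respect to pixel i is weights[i], so
    moving every pixel by epsilon in the direction -sign(score) * sign(w_i)
    pushes the score toward the other class as fast as possible when no
    single pixel may change by more than epsilon. Output is clipped to [0, 1].
    """
    direction = -sign(score(weights, pixels, bias))
    return [min(1.0, max(0.0, p + direction * epsilon * sign(w)))
            for w, p in zip(weights, pixels)]


def demo(n_pixels, seed=0):
    # Hypothetical random weights and pixel intensities, for illustration only.
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n_pixels)]
    pixels = [rng.uniform(0.3, 0.7) for _ in range(n_pixels)]
    # Pick the bias so the clean image scores exactly +1: "turtle" with a fixed margin.
    bias = 1.0 - score(weights, pixels)
    # Unclipped, the attack lowers the score by epsilon * sum(|w_i|), so this is
    # just over the smallest uniform per-pixel change that crosses the boundary.
    epsilon = 1.01 * score(weights, pixels, bias) / sum(abs(w) for w in weights)
    adversarial = fgsm_linear(weights, pixels, epsilon, bias)
    return label(weights, pixels, bias), label(weights, adversarial, bias), epsilon


for n in (100, 10_000):
    before, after, eps = demo(n)
    print(f"{n:>6} pixels: {before} -> {after}, per-pixel change {eps:.5f}")
```

Both toy models flip from "turtle" to "rifle", but with the same decision margin the 10,000-pixel model needs roughly a hundredth of the per-pixel change the 100-pixel model does, because the sum of weight magnitudes grows with the number of pixels. Real networks are not linear, so treat this only as an illustration of how many small, individually meaningless pixel contributions can dominate a decision.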

And keep in mind that humans aren’t perfect at this, either. Just one example is the optical illusion of the young woman or the old hag (today I’m only seeing the young woman), which shows that visuals can be difficult even for members of a species that has evolved over millions of years.

However, the simplicity of the trick shows that AI object recognition still has a way to go.